Some readers will probably still run into this problem. In my case winutils.exe still would not start, although that is a problem with my own computer.

#HADOOP WINUTILS.EXE DOWNLOAD DOWNLOAD#

Download again and place it in the C:\windows\System32 directory.

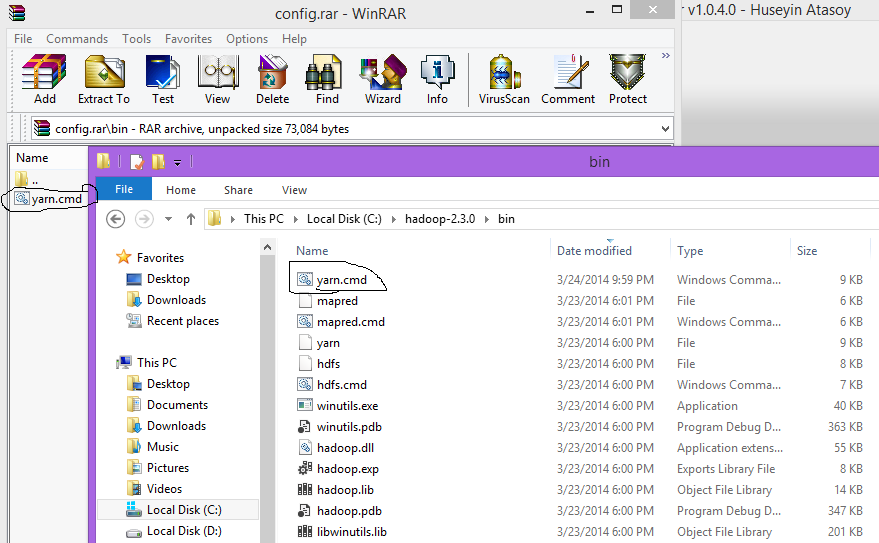

We're going to download the 2.7 winutils.exe and get it working.

After the download, it turns out that the hadoop.dll file is also required. Hadoop's native mechanism calls this program.

#HADOOP WINUTILS.EXE DOWNLOAD WINDOWS#

Set the Hadoop installation directory to d:\nlsoftware\hadoop\hadoop-2.7.2, then click "Apply", "OK", "Next", "Allow output folders for source folders", "Finish".

Three classes were created under the project: Mapper, Reducer, and main. The mapper begins with package bb, imports java.io.IOException, IntWritable, Text, Mapper, and Mapper.Context, and declares public class TestMapper extends Mapper.

I created two text files under input in HDFS. They can be used for testing, or you can test with other files.

A little explanation of the run configuration: the entry point takes two arguments, the input path and the output path.

hdfs://192.168.85.2:9000/input/* hdfs://192.168.85.2:9000/output6

The name of the output6 folder was chosen arbitrarily.

Error: Server IPC version 9 cannot communicate with client version 4

This message reports a version mismatch: the remote Hadoop version is different from the version of the Hadoop jar packages in the project. So I changed the Hadoop jar packages to the cluster's version (any 2.* version should work; if the exact one is not available, a close one can be used).

A further failure turned out to be because the local Windows Hadoop installation had no winutils.exe.
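The Java sources of the three classes are not reproduced here, so the following is only an illustration of the roles a Mapper, a Reducer, and a main class play in a word-count job. It is plain Python, not the Hadoop API, and the names `map_words`, `reduce_counts`, and `run_job` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_words(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper role: emit (word, 1) for every whitespace-separated word."""
    for word in line.split():
        yield word, 1


def reduce_counts(word: str, counts: Iterable[int]) -> Tuple[str, int]:
    """Reducer role: sum all the counts that were emitted for one word."""
    return word, sum(counts)


def run_job(lines: Iterable[str]) -> Dict[str, int]:
    """Main/driver role: map every line, group by word, then reduce."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for line in lines:
        for word, one in map_words(line):
            grouped[word].append(one)  # stands in for Hadoop's shuffle step
    return dict(reduce_counts(word, counts) for word, counts in grouped.items())


if __name__ == "__main__":
    # Two short "files", similar in spirit to the two test files in HDFS.
    print(run_job(["hello hadoop", "hello world"]))
```

In the real job the grouping step is done by the framework between the map and reduce phases; here it is a dictionary so the sketch stays self-contained.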

#HADOOP WINUTILS.EXE DOWNLOAD INSTALL#

Switch to the Hadoop view, and then open MapReduce Tools.

Next, add the Hadoop service. Before configuring the connection, look at the Hadoop configuration:

1. hadoop/etc/hadoop/mapred-site.xml: check the IP and port configured there to fill in Map/Reduce Master.
2. hadoop/etc/hadoop/core-site.xml: check the IP and port in fs.default.name to fill in DFS Master.

For User name, simply enter the user that operates Hadoop. That completes the configuration, and if all goes well you can see the connected service.

To create the project, go through "File", "New", "Project", "Map/Reduce Project", set Project name: wordcount, and then choose "Configure Hadoop install directory".
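Looking up the DFS Master address in core-site.xml can also be done with a few lines of code. The sketch below is an assumption-laden example: the XML is a made-up sample that reuses the address from this article, and `dfs_master` is a hypothetical helper. It also accepts `fs.defaultFS`, the newer name of which `fs.default.name` is a deprecated alias in Hadoop 2.x.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Tuple
from urllib.parse import urlparse

# Sample content only; a real core-site.xml will hold your own cluster address.
SAMPLE_CORE_SITE = """<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://192.168.85.2:9000</value>
  </property>
</configuration>
"""


def dfs_master(core_site_xml: str) -> Optional[Tuple[str, int]]:
    """Return (host, port) of the default file system, or None if not found."""
    root = ET.fromstring(core_site_xml)
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name in ("fs.defaultFS", "fs.default.name"):
            uri = urlparse((prop.findtext("value") or "").strip())
            if uri.hostname and uri.port:
                return uri.hostname, uri.port
    return None


if __name__ == "__main__":
    print(dfs_master(SAMPLE_CORE_SITE))
```

The host and port returned are the values to enter under DFS Master in the plug-in dialog.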

#HADOOP WINUTILS.EXE DOWNLOAD PROFESSIONAL#

I originally thought that building a local environment for programming and testing Hadoop programs would be simple, but it turned out to be a lot of trouble. Here are the steps and the problems I ran into; I hope it goes smoothly for everyone.

Connecting to a Hadoop cluster and developing against it requires the following preparation:

1. A remote Hadoop cluster (my master address is 192.168.85.2).
2. A local MyEclipse and the plug-in that connects MyEclipse to Hadoop.

First download the hadoop-eclipse-plugin; I use hadoop-eclipse-plugin-2.6.0.jar. Place the downloaded jar in the "MyEclipse Professional 2014\dropins" directory. After restarting MyEclipse, a Map/Reduce option appears under perspectives and views.

You can also create a file hive-site.

To change the permissions, go to the command prompt and run:

\path\to\winutils\Winutils.exe chmod 777 \tmp\hive

This changes the permissions of the /tmp/hive directory so that all three classes of users (Owner, Group, and Public) have read, write, and execute access.
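For readers unfamiliar with the 777 in that chmod command, here is a small sketch of how a three-digit octal mode maps to permissions. `describe_mode` is a hypothetical helper written for this explanation; it does not call winutils.

```python
from typing import Dict


def describe_mode(mode: str) -> Dict[str, str]:
    """Translate an octal mode string such as '777' into rwx flags per class."""
    if len(mode) != 3 or any(ch not in "01234567" for ch in mode):
        raise ValueError(f"expected three octal digits, got {mode!r}")
    result = {}
    # One digit each for owner, group, and everyone else ("Public" above).
    for who, digit in zip(("owner", "group", "other"), mode):
        bits = int(digit)
        result[who] = (
            ("r" if bits & 4 else "-")    # 4 = read
            + ("w" if bits & 2 else "-")  # 2 = write
            + ("x" if bits & 1 else "-")  # 1 = execute
        )
    return result


if __name__ == "__main__":
    print(describe_mode("777"))
```

Each digit is the sum of read (4), write (2), and execute (1), so 7 grants all three to that class of user.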

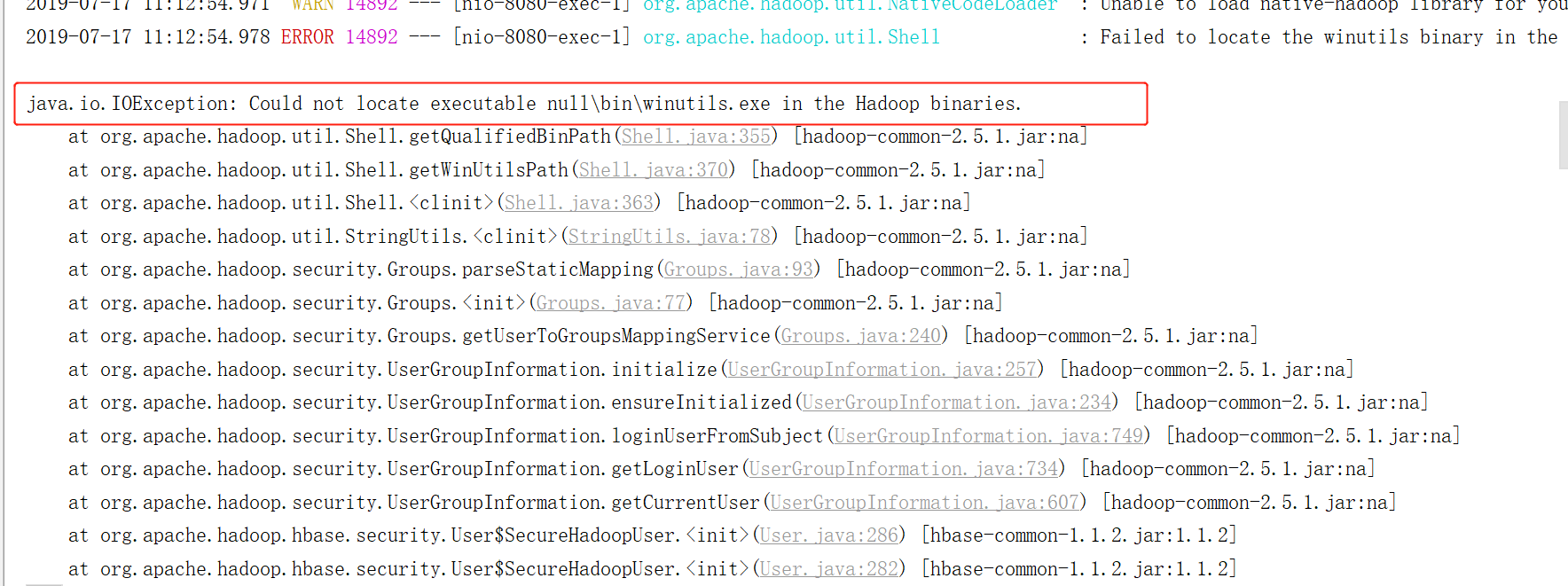

Error:

    at 0(Native Method)
    at (NativeConstructorAccessorImpl.java:62)
    at (DelegatingConstructorAccessorImpl.java:45)
    at .newInstance(Constructor.java:423)
    at .(IsolatedClientLoader.scala:258)
    at .hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:359)
    at .hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:263)
    at .$lzycompute(HiveSharedState.scala:39)

This is caused because there are no permissions to the folder file:///tmp/hive. You can run a command in the command prompt that changes the permissions of the /tmp/hive directory.

Error: Job aborted due to stage failure: Task 3 in stage 2.0 failed 1 times, most recent failure: Lost task 3.0 in stage 2.0 (TID 13, localhost):

    at (ProcessBuilder.java:1012)
    at .nCommand(Shell.java:483)
    at .n(Shell.java:456)
    at .Shell$ShellCommandExecutor.execute(Shell.java:722)
    at .FileUtil.chmod(FileUtil.java:873)
    at .FileUtil.chmod(FileUtil.java:853)
    at .Utils$.fetchFile(Utils.scala:471)

This is caused because the HADOOP_HOME environment variable is not explicitly set. Download the winutils.exe binary from the repository.

NOTE: You need to select the correct Hadoop version, one that is compatible with your Spark distribution. The Hadoop version is often encoded in the Spark download name, for example spark-2.4.0-bin-hadoop2.7.tgz.

Then set the HADOOP_HOME environment variable to %SPARK_HOME%/tmp/hadoop or to the location where bin\winutils.exe is located.
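Both checks described here (which Hadoop version a Spark download targets, and whether HADOOP_HOME really points at a winutils.exe) are easy to script. The sketch below uses hypothetical helper names and assumes the usual `-bin-hadoopX.Y` naming of Spark archives; it only inspects the environment and the file system and does not run winutils.

```python
import os
import re
from typing import Mapping, Optional


def hadoop_version_from_spark_package(filename: str) -> Optional[str]:
    """Extract the Hadoop version from a name like spark-2.4.0-bin-hadoop2.7.tgz."""
    match = re.search(r"-bin-hadoop(\d+(?:\.\d+)*)", filename)
    return match.group(1) if match else None


def find_winutils(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the path to bin/winutils.exe under HADOOP_HOME, or None."""
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        return None  # the variable is not set at all
    candidate = os.path.join(hadoop_home, "bin", "winutils.exe")
    return candidate if os.path.isfile(candidate) else None


if __name__ == "__main__":
    print(hadoop_version_from_spark_package("spark-2.4.0-bin-hadoop2.7.tgz"))
    print(find_winutils() or "HADOOP_HOME is unset or has no bin\\winutils.exe")
```

Running it before starting Spark gives a quick hint whether the HADOOP_HOME error above is likely to appear.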
